Quick overview of DRBD

DRBD synchronizes data at the block device level (typically a spinning or solid-state disk), transparently to the application, the database, and even the file system. DRBD requires a journaling file system such as ext3 or ext4. In this solution it runs in active-standby mode: at any point in time the directories managed by DRBD are accessible for reads and writes on exactly one of the two hosts and inaccessible (even for reads) on the other. Any changes made on the active host are synchronously replicated to the standby host by DRBD.

Prerequisites

Two servers, each with:

* Red Hat/CentOS 6.3

* Network connectivity

* MySQL 5.5 or later

* Unpartitioned space on the local disks for the DRBD backing devices. In this example I use LVM logical volumes as the DRBD backing devices.

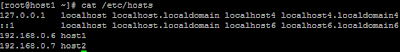

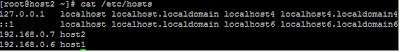

For simplicity, the servers are named host1 and host2.

host1: 192.168.0.6

host2: 192.168.0.7

The following configuration files are created:

/etc/hosts (host1)

/etc/hosts (host2)
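The file contents are not reproduced here. As a minimal sketch, assuming each host only needs to resolve both names to the addresses listed earlier, the same two entries would go into /etc/hosts on host1 and host2 (the helper name `hosts_entries` is made up for illustration):

```shell
# Print the two name-resolution entries used in this walkthrough.
# hosts_entries is a hypothetical helper, not part of any package.
hosts_entries() {
    cat <<'EOF'
192.168.0.6   host1
192.168.0.7   host2
EOF
}

# Show the lines. To apply them, append to /etc/hosts on each host as root:
#   hosts_entries >> /etc/hosts
hosts_entries
```

DRBD matches the host names in its resource file against the output of `uname -n`, so the names used here should agree with each machine's actual host name.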

Ensure DRBD user-land tools are installed

First, use yum to check whether the tools are already there (if "Repo" is shown as "installed", the package is already installed):

Also check whether kmod-drbd84 is installed. Make sure that both packages have the same version number:
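The check itself could look like the sketch below. It assumes the package names used later in this post (drbd84-utils and kmod-drbd84); the function name `check_drbd_pkgs` is invented for illustration, and only `rpm -q` with `--queryformat` is used to read the versions.

```shell
# For the "Repo : installed" field mentioned above, query yum directly:
#   yum info drbd84-utils kmod-drbd84

# check_drbd_pkgs (hypothetical helper): succeed only if both packages are
# installed and report the same upstream version, e.g. 8.4.1.
check_drbd_pkgs() {
    # rpm -q exits non-zero when a package is not installed
    utils_ver=$(rpm -q --queryformat '%{VERSION}' drbd84-utils) || return 1
    kmod_ver=$(rpm -q --queryformat '%{VERSION}' kmod-drbd84) || return 1
    if [ "$utils_ver" = "$kmod_ver" ]; then
        echo "OK: both packages at $utils_ver"
    else
        echo "MISMATCH: drbd84-utils=$utils_ver kmod-drbd84=$kmod_ver"
        return 2
    fi
}
```

Running `check_drbd_pkgs` on each host gives a quick yes/no answer before moving on to the DRBD configuration.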

These packages are not in the default yum repositories, so you may have to download them from ELRepo:

wget http://elrepo.org/linux/elrepo/el6/x86_64/RPMS/kmod-drbd84-8.4.1-1.el6.elrepo.x86_64.rpm

wget http://elrepo.org/linux/elrepo/el6/x86_64/RPMS/drbd84-utils-8.4.1-1.el6.elrepo.x86_64.rpm

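If the packages are not already installed, install them (this form assumes yum can find them in a configured repository; otherwise pass the downloaded .rpm files to yum install instead of the package names):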

yum install drbd84-utils kmod-drbd84 -y

The same steps should be performed on host2

Configure DRBD/FileSystem

Confirm that you have a volume group available in which to create the logical volumes.

In this case I have a volume group 'vg0' with 3 GB of free space. I create two logical volumes on each host:

[root@host1 ~]# lvcreate --name data --size 1G vg0

[root@host1 ~]# lvcreate --name logs --size 1G vg0

[root@host2 ~]# lvcreate --name data --size 1G vg0

[root@host2 ~]# lvcreate --name logs --size 1G vg0

Note: If you don't have a volume group available but do have an empty partition, create a volume group on that partition first.

The new logical volumes will be used as a resource that is managed (and synchronized between hosts) by DRBD. For this reason a new DRBD resource file must be created in the /etc/drbd.d/ directory; the contents should look like this:
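The original resource file is not reproduced here, so the following is only a sketch of what /etc/drbd.d/r0.res might contain in DRBD 8.4 syntax. It assumes a single resource r0 with two volumes (drbd0 on the 'data' logical volume and drbd1 on 'logs'), internal metadata, synchronous protocol C, and one shared replication port (7789). The helper name `write_r0_res` is hypothetical, and the original layout may have differed.

```shell
# write_r0_res (hypothetical helper): write the sketch to the given path,
# or to /etc/drbd.d/r0.res when no path is passed.
write_r0_res() {
    cat > "${1:-/etc/drbd.d/r0.res}" <<'EOF'
resource r0 {
    net {
        protocol C;              # synchronous replication
    }
    volume 0 {
        device    /dev/drbd0;
        disk      /dev/vg0/data;
        meta-disk internal;
    }
    volume 1 {
        device    /dev/drbd1;
        disk      /dev/vg0/logs;
        meta-disk internal;
    }
    on host1 {                   # must match `uname -n` on the first host
        address   192.168.0.6:7789;
    }
    on host2 {                   # must match `uname -n` on the second host
        address   192.168.0.7:7789;
    }
}
EOF
}
```

Calling `write_r0_res /tmp/r0.res` first makes it possible to review the file before placing it in /etc/drbd.d/.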

The same configuration file must be copied over to the same location on the second host:

scp /etc/drbd.d/r0.res host2:/etc/drbd.d/

At this point, I disable the firewall on host1 and host2 so that DRBD can communicate on the required ports (7789 and 7788).
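If switching the firewall off entirely is not acceptable, a narrower option is to accept only the DRBD ports from the peer. This is a sketch rather than what was done in this setup; it assumes iptables is the active firewall (as on a stock CentOS 6 install), and `open_drbd_ports` is a made-up helper name.

```shell
# open_drbd_ports (hypothetical helper): accept TCP 7788-7789 from one peer.
# $1 = IP address of the other DRBD node
open_drbd_ports() {
    peer_ip=$1
    iptables -I INPUT -p tcp -s "$peer_ip" --dport 7788:7789 -j ACCEPT
}

# Example (as root):
#   on host1: open_drbd_ports 192.168.0.7
#   on host2: open_drbd_ports 192.168.0.6
# To keep the rule across reboots on CentOS 6:
#   service iptables save
```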

Next, metadata must be created for the DRBD resource (r0). On each host, run the following:

[root@host1 ~]# drbdadm create-md r0

[root@host2 ~]# drbdadm create-md r0

Now start the DRBD daemon on both hosts (run the command at about the same time on each host, because the two DRBD nodes need to communicate with each other).

[root@host1 ~]# /etc/init.d/drbd start

[root@host2 ~]# /etc/init.d/drbd start

In order to create the file systems (and go on to store useful data in them), one of the hosts must be made primary for the 'r0' resource:

[root@host1 ~]# drbdadm -- --overwrite-data-of-peer primary all

Now that the devices are available as /dev/drbd0 and /dev/drbd1, it is possible to create a file system on each of them:

[root@host1 ~]# mkfs -t ext4 /dev/drbd0

[root@host1 ~]# mkfs -t ext4 /dev/drbd1

Install and configure MySQL

MySQL binaries should be installed on both of the servers.

[root@host1 ~]# yum install mysql-server mysql -y

[root@host2 ~]# yum install mysql-server mysql -y

Create the following directories on both hosts:

mkdir /var/lib/mysql_data_drbd

mkdir /var/lib/mysql_logs_drbd

chown -R mysql:mysql /var/lib/mysql_data_drbd

chown -R mysql:mysql /var/lib/mysql_logs_drbd

At this stage, mount the DRBD devices (on the primary, host1) so that the MySQL data directory can be initialized:

[root@host1 ~]# mount /dev/drbd0 /var/lib/mysql_data_drbd

[root@host1 ~]# mount /dev/drbd1 /var/lib/mysql_logs_drbd

[root@host1 ~]# mkdir /var/lib/mysql_data_drbd/data

[root@host1 ~]# mkdir /var/lib/mysql_logs_drbd/logs

Ensure that host1 is currently the primary and that the DRBD backing devices are up.
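One way to check this is to read /proc/drbd, where DRBD 8.x reports the connection state (cs), roles (ro), and disk states (ds) for each device. The helpers below are a sketch with invented names (`drbd_status`, `drbd_is_primary_uptodate`); they assume the usual line format ` 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate ...`.

```shell
# drbd_status (hypothetical helper): print one line per DRBD minor as
# "<minor> <connection state> <local/peer roles> <local/peer disk states>".
# $1 = file to read (defaults to /proc/drbd)
drbd_status() {
    awk '/^ *[0-9]+: cs:/ {
        sub(/:$/, "", $1)                       # "0:" -> "0"
        print $1, substr($2, 4), substr($3, 4), substr($4, 4)
    }' "${1:-/proc/drbd}"
}

# drbd_is_primary_uptodate (hypothetical helper): succeed only if at least
# one minor exists and every minor is Primary locally with an UpToDate
# local disk.
drbd_is_primary_uptodate() {
    drbd_status "$1" | awk '
        { seen = 1 }
        $3 !~ /^Primary\// || $4 !~ /^UpToDate\// { bad = 1 }
        END { if (seen && !bad) exit 0; exit 1 }'
}
```

On host1, `drbd_status` should list minors 0 and 1 with a role of Primary/Secondary, and `drbd_is_primary_uptodate && echo ready` gives a simple go/no-go check before touching the MySQL files.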

Edit the /var/lib/mysql_data_drbd/my.cnf file and set datadir=/var/lib/mysql_data_drbd/data and log_bin=/var/lib/mysql_logs_drbd/logs in the [mysqld] section. Also ensure that the socket is set to /var/lib/mysql/mysql.sock and the pid file to /var/lib/mysql/mysql.pid.

Here is the MySQL configuration file on host1:
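The original file is not reproduced here. A minimal sketch containing only the four settings described above might look like this; anything else the real file contained is unknown, and `write_my_cnf` is a hypothetical helper name.

```shell
# write_my_cnf (hypothetical helper): write a minimal options file to the
# given path, or to /var/lib/mysql_data_drbd/my.cnf when no path is passed.
write_my_cnf() {
    cat > "${1:-/var/lib/mysql_data_drbd/my.cnf}" <<'EOF'
[mysqld]
datadir  = /var/lib/mysql_data_drbd/data
log_bin  = /var/lib/mysql_logs_drbd/logs
socket   = /var/lib/mysql/mysql.sock
pid-file = /var/lib/mysql/mysql.pid
EOF
}
```

Because the file lives on the DRBD-backed data volume, it follows the data to whichever host is currently primary.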

Create a symlink to the MySQL options file on both hosts:

[root@host1 ~]# rm -f /etc/my.cnf

[root@host1 ~]# cd /etc

[root@host1 ~]# ln -s /var/lib/mysql_data_drbd/my.cnf my.cnf

[root@host2 ~]# rm -f /etc/my.cnf

[root@host2 ~]# cd /etc

[root@host2 ~]# ln -s /var/lib/mysql_data_drbd/my.cnf my.cnf

Now install the default MySQL database files:

[root@host1 ~]# mysql_install_db --no-defaults --datadir=/var/lib/mysql_data_drbd/data --user=mysql

Start MySQL server

Let's start the MySQL server and insert some test data:

[root@host1 ~]# mysqld --defaults-file=/etc/my.cnf &

[root@host1 ~]# mysql -e "CREATE DATABASE IF NOT EXISTS mysqlslap; USE mysqlslap; CREATE TABLE IF NOT EXISTS test (a INT, b VARCHAR(200)); INSERT INTO test VALUES (1, 'mysql drbd')"

Manual failover

It is possible to migrate the MySQL/DRBD resource to the other host. The steps are as follows.

On host1 (currently primary):

# Stop mysql instance

[root@host1 ~]# mysqladmin -uroot -p shutdown

# Unmount the DRBD file systems

[root@host1 ~]# umount /var/lib/mysql_data_drbd; umount /var/lib/mysql_logs_drbd

# Switch DRBD to SECONDARY mode

[root@host1 ~]# drbdadm secondary r0

On host2:

# Make this node PRIMARY

[root@host2 ~]# drbdadm primary r0

# Mount the DRBD file systems

[root@host2 ~]# mount /dev/drbd0 /var/lib/mysql_data_drbd

[root@host2 ~]# mount /dev/drbd1 /var/lib/mysql_logs_drbd

# Start MySQL server

[root@host2 ~]# mysqld --defaults-file=/etc/my.cnf &
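The two halves of the failover can be wrapped in small functions so that each step runs only if the previous one succeeded. This is a sketch built from the commands above; the function names `demote_node` and `promote_node` are made up, and the mount points and resource name are the ones used in this post.

```shell
DATA_MNT=/var/lib/mysql_data_drbd
LOGS_MNT=/var/lib/mysql_logs_drbd

# demote_node (hypothetical helper): run on the current primary.
# Stops MySQL, unmounts both file systems, then demotes r0.
demote_node() {
    mysqladmin -uroot -p shutdown &&
    umount "$DATA_MNT" &&
    umount "$LOGS_MNT" &&
    drbdadm secondary r0
}

# promote_node (hypothetical helper): run on the node taking over.
# Promotes r0, mounts both file systems, then starts MySQL in the background.
promote_node() {
    drbdadm primary r0 &&
    mount /dev/drbd0 "$DATA_MNT" &&
    mount /dev/drbd1 "$LOGS_MNT" || return 1
    mysqld --defaults-file=/etc/my.cnf &
}
```

Run `demote_node` on host1 first and `promote_node` on host2 only after it has returned successfully, since DRBD in this configuration allows only one primary at a time.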